Artificial intelligence has become the defining technology of the decade, reshaping industries from healthcare to finance. Yet beneath its rapid adoption lies a growing concern: energy consumption. The newest generation of ChatGPT, celebrated for its advanced reasoning and conversational abilities, is also drawing scrutiny for its massive power demands, reportedly consuming up to 20 times more energy than the earliest version.

This stark increase has raised alarms among environmentalists, economists, and policymakers who fear that AI’s progress may be colliding with sustainability goals.

The Escalating Energy Demands of AI

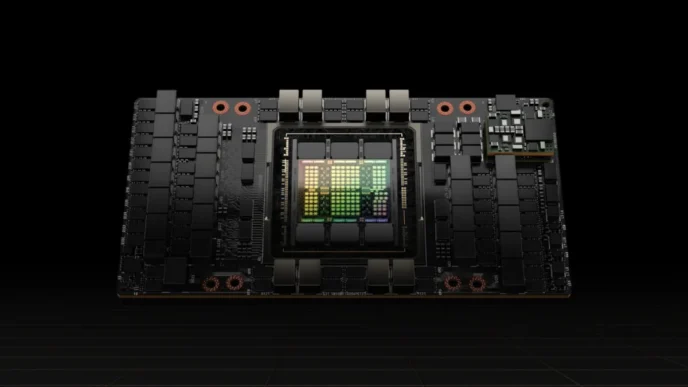

Training and running large language models (LLMs) like ChatGPT requires enormous computing resources. Each new version is larger, more capable, and significantly more power-hungry than its predecessor. Earlier iterations required substantial but comparatively modest energy inputs; today’s models demand sprawling data centers packed with thousands of high-performance graphics processing units (GPUs).

These GPUs run continuously for weeks or even months during training phases, consuming energy on par with what entire towns use. Once deployed, every user query also consumes electricity, multiplied millions of times daily across global servers.

Industry insiders estimate that the newest ChatGPT could be up to 20 times more energy-intensive than the original version released just a few years ago.
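To make the per-query arithmetic concrete, here is a minimal sketch of how daily inference energy scales with query volume and with a 20x increase in energy per query. Only the 20x multiplier comes from the estimate above; the query volume and the baseline energy per query are hypothetical placeholders, not measured or reported figures, and the function name is illustrative.

```python
def daily_inference_energy_kwh(queries_per_day, wh_per_query):
    """Daily inference energy in kWh: queries x watt-hours per query / 1000."""
    return queries_per_day * wh_per_query / 1000


# Hypothetical placeholder inputs (NOT measured or reported values).
QUERIES_PER_DAY = 10_000_000
BASELINE_WH_PER_QUERY = 0.5

# The "up to 20 times" figure reported in the article.
MULTIPLIER = 20

baseline_kwh = daily_inference_energy_kwh(QUERIES_PER_DAY, BASELINE_WH_PER_QUERY)
newest_kwh = daily_inference_energy_kwh(
    QUERIES_PER_DAY, BASELINE_WH_PER_QUERY * MULTIPLIER
)

print(f"Baseline: {baseline_kwh:,.0f} kWh/day")
print(f"Newest:   {newest_kwh:,.0f} kWh/day")
```

Because the relationship is linear, the same multiplier carries through at any query volume: whatever the real baseline is, a model that uses 20 times more energy per query uses 20 times more energy per day for the same traffic.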

The Transparency Problem

Despite mounting concerns, the energy usage of large AI systems remains opaque. AI companies, including those developing cutting-edge models, rarely disclose detailed figures on how much electricity their systems consume. Unlike firms in traditional industries such as aviation or manufacturing, AI companies are not required by regulation to report environmental impact data.

This lack of transparency has left researchers relying on indirect estimates, extrapolations from data center energy use, and rough calculations based on GPU capacity. Without concrete disclosures, it is nearly impossible for policymakers or the public to grasp the full ecological footprint of AI systems.
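The kind of rough, GPU-based calculation researchers fall back on can be sketched as follows. This is an illustrative back-of-the-envelope formula, not any particular research group's method: it multiplies GPU count, average power draw per GPU, and training hours, then scales by a power usage effectiveness (PUE) factor to account for cooling and other facility overhead. All input values below are hypothetical and are not figures for ChatGPT or any real model.

```python
def training_energy_mwh(num_gpus, gpu_power_watts, hours, pue=1.0):
    """Rough training energy in MWh.

    energy = GPUs x average watts per GPU x hours x PUE
    PUE (power usage effectiveness) scales IT energy up to
    whole-facility energy; 1.0 means no overhead.
    """
    watt_hours = num_gpus * gpu_power_watts * hours * pue
    return watt_hours / 1_000_000  # Wh -> MWh


# Hypothetical inputs for illustration only.
estimate = training_energy_mwh(
    num_gpus=1000,        # assumed cluster size
    gpu_power_watts=500,  # assumed average draw per GPU
    hours=720,            # assumed 30 days of continuous training
    pue=1.2,              # assumed facility overhead
)
print(f"{estimate:.1f} MWh")
```

Every input here is something an outside researcher has to guess, which is why estimates built this way can differ widely and why direct disclosure would be more reliable.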

Impact on Consumers and Electricity Costs

The growing electricity needs of AI are not confined to data centers. As demand surges, utility companies may face increasing pressure to expand capacity. This could translate into rising energy prices for households and businesses, especially in regions already struggling with strained grids.

In the U.S. alone, electricity demand from data centers is projected to double within the decade, with AI-driven services like ChatGPT a key contributor. The financial impact on consumers may become a flashpoint in debates about who should bear the costs of AI’s energy hunger.

Environmental Implications

The environmental consequences of AI’s energy consumption are equally pressing. If data centers rely heavily on fossil fuels, the carbon emissions tied to AI use could erode global progress toward climate goals. Even when powered by renewable energy, large-scale deployments strain infrastructure and require significant land, water, and material resources.
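The dependence of emissions on the energy mix can be illustrated with a simple calculation: the same amount of electricity produces very different emissions depending on the carbon intensity of the grid supplying it. The sketch below uses hypothetical energy and intensity values chosen only to show the contrast; they do not describe any real data center or grid.

```python
def emissions_tonnes_co2(energy_mwh, grid_intensity_kg_per_mwh):
    """Emissions in tonnes of CO2: energy (MWh) x intensity (kg CO2/MWh) / 1000."""
    return energy_mwh * grid_intensity_kg_per_mwh / 1000


# Hypothetical values for illustration only.
ENERGY_MWH = 1000            # assumed electricity consumed
FOSSIL_HEAVY_INTENSITY = 800  # assumed kg CO2 per MWh, fossil-heavy grid
LOW_CARBON_INTENSITY = 50     # assumed kg CO2 per MWh, low-carbon grid

fossil_heavy = emissions_tonnes_co2(ENERGY_MWH, FOSSIL_HEAVY_INTENSITY)
low_carbon = emissions_tonnes_co2(ENERGY_MWH, LOW_CARBON_INTENSITY)

print(f"Fossil-heavy grid: {fossil_heavy:.0f} t CO2")
print(f"Low-carbon grid:   {low_carbon:.0f} t CO2")
```

This covers operational emissions only; it does not capture the land, water, and material costs mentioned above.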

Critics warn that AI risks becoming the next major driver of tech-sector emissions, rivaling aviation or shipping in its climate impact. Without checks, the environmental cost of answering millions of ChatGPT prompts each day could far outweigh the social and economic benefits.

Can AI Be Made Sustainable?

Industry leaders and researchers are exploring ways to reduce AI’s energy footprint:

- Efficient model design: Smaller, specialized models that use fewer parameters could provide comparable results for specific tasks at a fraction of the energy cost.

- Renewable integration: Expanding the use of clean energy sources to power data centers can reduce emissions.

- Hardware innovation: Next-generation chips designed for AI workloads may run those workloads more efficiently than current GPUs.

- Transparency mandates: Requiring companies to disclose energy and carbon usage could pressure firms to prioritize sustainability.

Still, these solutions require coordination between tech firms, governments, and energy providers — a collaboration that has yet to fully materialize.

The Bigger Picture

The debate over ChatGPT’s energy use highlights a fundamental tension: society wants the benefits of AI — smarter tools, faster insights, economic growth — but is only beginning to grapple with the hidden environmental price tag.

As one expert put it, “AI doesn’t run on magic; it runs on megawatts.” Unless transparency improves and sustainable practices become central to development, the newest AI breakthroughs may leave behind a carbon legacy at odds with the future they promise to create.